On our website, you'll find frequent references to the term "magnitude," a measure of how bright a star looks to us in the sky. But what does it mean?

The following explanation of what the magnitude measurement scale is and how it was invented is taken from the AAVSO's education project Variable Star Astronomy, Chapter 2:

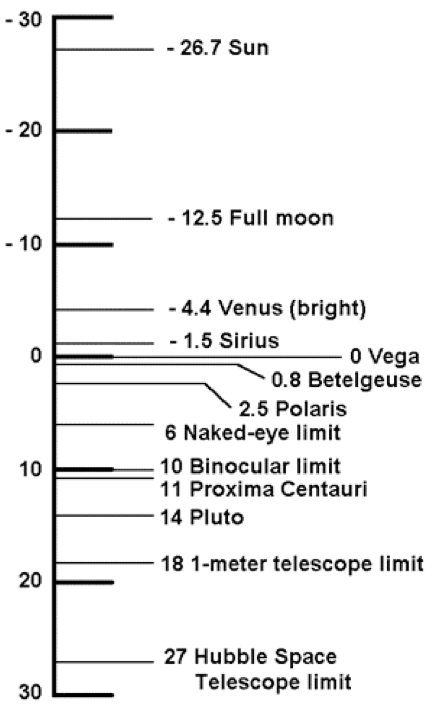

If you have observed the night sky, you have noticed that some stars are brighter than others. The brightest star in the northern hemisphere winter sky is Sirius, the "Dog Star" accompanying Orion on his nightly journey through the sky. In the constellation of Lyra the Harp, Vega shines the brightest in the summer sky. How bright is Sirius compared to its starry companions in the night sky? How does it compare to Vega, its counterpart in the summer sky? How bright are these stars compared to the light reflected from the surface of the Moon? From the surface of Venus?

The method we use today to compare the apparent brightness of stars is rooted in antiquity. Hipparchus, a Greek astronomer who lived in the second century BC, is usually credited with formulating a system to classify the brightness of stars. He called the brightest star in each constellation "first magnitude." Ptolemy, in 140 AD, refined Hipparchus' system and used a 1 to 6 scale to compare star brightness, with 1 being the brightest and 6 the faintest. Astronomers in the mid-1800s quantified these numbers and modified the old Greek system. Measurements demonstrated that 1st magnitude stars were about 100 times brighter than 6th magnitude stars. It has also been calculated that the human eye perceives a one magnitude change as being 2.5 times brighter, so a change of 5 magnitudes would seem to be 2.5⁵ (or approximately 100) times brighter. Therefore a difference of 5 magnitudes has been defined as being equal to a factor of exactly 100 in apparent brightness.

It follows that a difference of one magnitude corresponds to a brightness factor equal to the fifth root of 100, or approximately 2.5; therefore the apparent brightness of two objects can be compared by taking the difference of their magnitudes and raising 2.5 to the power equal to that difference. For example, Venus and Sirius have a difference of about 3 magnitudes. This means that Venus appears 2.5³ (or about 16) times brighter to the human eye than Sirius. In other words, it would take about 16 stars with the brightness of Sirius in one spot in the sky to equal the brightness of Venus. Sirius, the apparently brightest star in the winter sky, and the Sun have an apparent magnitude difference of about 25. This means that we would need 2.5²⁵, or about 9 billion, Sirius-type stars at one spot to shine as brightly as our Sun! The full Moon appears 10 magnitudes brighter than Jupiter; 2.5¹⁰ is approximately 10,000, therefore it would take about 10,000 Jupiters to appear as bright as the full Moon.
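The comparisons above can be reproduced with a few lines of Python. This is only an illustrative sketch: the function name `brightness_ratio` and its `exact` flag are our own choices, not part of the AAVSO text. It lets you switch between the rounded factor of 2.5 used in the examples and the exact fifth root of 100 (about 2.512).

```python
def brightness_ratio(delta_mag: float, exact: bool = True) -> float:
    """Return how many times brighter one object appears than another,
    given the difference between their magnitudes.

    exact=True  uses the fifth root of 100 (about 2.512) per magnitude.
    exact=False uses the rounded value 2.5, as in the worked examples.
    """
    per_magnitude = 100 ** (1 / 5) if exact else 2.5
    return per_magnitude ** delta_mag


# Magnitude differences quoted in the text (approximate values).
examples = [
    ("Venus vs. Sirius", 3),
    ("Sun vs. Sirius", 25),
    ("Full Moon vs. Jupiter", 10),
]

for label, delta in examples:
    rounded = brightness_ratio(delta, exact=False)
    precise = brightness_ratio(delta, exact=True)
    print(f"{label}: {rounded:,.1f} (using 2.5), {precise:,.1f} (using 100^(1/5))")
```

The rounded and exact factors drift apart as the magnitude difference grows. For 25 magnitudes, 2.5 gives roughly 9 billion, while the exact factor gives 100⁵, or 10 billion.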

On this scale, some objects are so bright that they have negative magnitudes, while the most powerful telescopes have revealed faint 30th-magnitude objects. The Hubble Space Telescope, for example, can "see" objects down to a magnitude of about +30. Sirius is the brightest star in the night sky, with an apparent magnitude of -1.4, while Vega is nearly zero magnitude (about +0.03).
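To get a feel for the span of the scale, the sketch below compares Sirius (magnitude -1.4) with a 30th-magnitude object, and also shows the reverse calculation from a brightness ratio back to a magnitude difference. The function names are hypothetical and chosen for illustration. Note that the 2.5 in the logarithmic form is exact (it is 5 divided by log₁₀ 100), not the rounded per-magnitude factor.

```python
import math


def ratio_from_magnitudes(mag_bright: float, mag_faint: float) -> float:
    """Brightness ratio between two objects, from their apparent magnitudes.

    Uses the definition that 5 magnitudes equal a factor of exactly 100.
    """
    return 100 ** ((mag_faint - mag_bright) / 5)


def magnitude_difference(ratio: float) -> float:
    """Magnitude difference corresponding to a given brightness ratio."""
    return 2.5 * math.log10(ratio)


# Sirius (-1.4) compared with a 30th-magnitude object at the telescope limit.
ratio = ratio_from_magnitudes(-1.4, 30.0)
print(f"Sirius appears about {ratio:.2e} times brighter")

# Round trip: converting the ratio back recovers the 31.4-magnitude gap.
print(f"Magnitude difference: {magnitude_difference(ratio):.1f}")
```

Under these assumptions, Sirius comes out a few trillion times brighter than the faintest objects mentioned above.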